Technology that accurately interprets a combination of touchscreen input and hand gestures is still at a nascent stage. Researchers at MIT plan to unveil a prototype gestural computing LCD system on December 19, 2009, at SIGGRAPH Asia. Microsoft’s SecondLight does deliver a seamless transition from gestural to touchscreen interaction, but at the cost of a bulky frame and expensive hardware.

The Media Lab system uses an array of liquid crystals, like an ordinary LCD, with optical sensors added behind it. The crystals act as lenses, displaying black-and-white patterns that are undetectable to the human eye and that let light reach the sensors. To this end, each 19-by-19 block of pixels is subdivided into a regular arrangement of monochrome rectangles of different sizes.

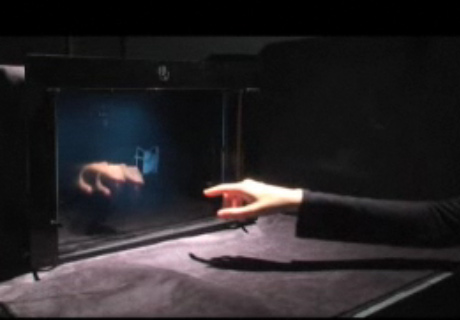

Simply put, light passing through an array of pinholes strikes a block of sensors. Because each image is recorded from a different position, the images together provide depth information about the object in front of the screen. Like similar existing touchscreen systems, the prototype uses a camera placed some distance away from the screen to capture the images that pass through the black-and-white squares.
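
To see why images taken from different positions carry distance information, consider a minimal sketch of an idealized two-pinhole setup. This is textbook pinhole geometry, not the MIT team's algorithm; the function names, the millimetre units, and the sample values (pinhole spacing, mask-to-sensor gap) are all assumptions chosen for illustration.

```python
# Idealized two-pinhole depth estimate (illustrative only, not MIT's method).
# All lengths are in millimetres; every name and value here is hypothetical.

def project_through_pinhole(point_x, point_z, pinhole_x, gap):
    """Return where a point's ray lands on the sensor, measured
    relative to the pinhole it passed through.

    point_x, point_z: lateral position and distance of the point in front of the mask
    pinhole_x:        lateral position of the pinhole on the mask
    gap:              distance between the mask and the sensor plane
    """
    return (pinhole_x - point_x) * gap / point_z


def depth_from_disparity(offset_a, offset_b, baseline, gap):
    """Recover distance from the difference between two sensor offsets.

    baseline is pinhole_a_x - pinhole_b_x. From the projection above,
    offset_a - offset_b = baseline * gap / depth, so depth follows directly.
    """
    disparity = offset_a - offset_b
    if disparity == 0:
        raise ValueError("zero disparity: point is effectively at infinity")
    return baseline * gap / disparity


# A fingertip 200 mm in front of the screen, seen through two pinholes 10 mm apart.
pinhole_a_x, pinhole_b_x, gap = 10.0, 0.0, 2.5
offset_a = project_through_pinhole(3.0, 200.0, pinhole_a_x, gap)
offset_b = project_through_pinhole(3.0, 200.0, pinhole_b_x, gap)
estimated_depth = depth_from_disparity(offset_a, offset_b, pinhole_a_x - pinhole_b_x, gap)
print(estimated_depth)
```

The closer the object, the larger the shift between the two recorded images, which is what lets a flat sensor array behind the display infer how far a hand is from the screen.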

Though it hasn’t yet been established whether the algorithms that control the gestural computing system would work in natural settings, MIT researchers have been able to smoothly manipulate on-screen objects using both gestural and touchscreen interactions.